A few months ago I started diving deeper into Large Language Models (LLMs), trying to understand to what extent they could help me and other developers improve our productivity.

To put this knowledge into practice, I decided to start an open-source project that combines two things I enjoy working with: AI and Kubernetes. From that mix, KoPylot was born.

GitHub - avsthiago/kopylot: Your Kubernetes AI Assistant written in Python

Why Kubernetes

Kubernetes is known as a tool with a steep learning curve. Mastering it requires a significant investment of time and effort.

When managing a cluster or deploying your application on Kubernetes, many things can go wrong. The network, the underlying infrastructure, and the interactions between the applications are just a few of the things that can break. And even when the application is working, you still have to walk the extra mile to make sure it is secure.

Given all this complexity, and the recent advancements in AI, it makes sense to me to try using AI to improve the lives of Kubernetes developers.

KoPylot features

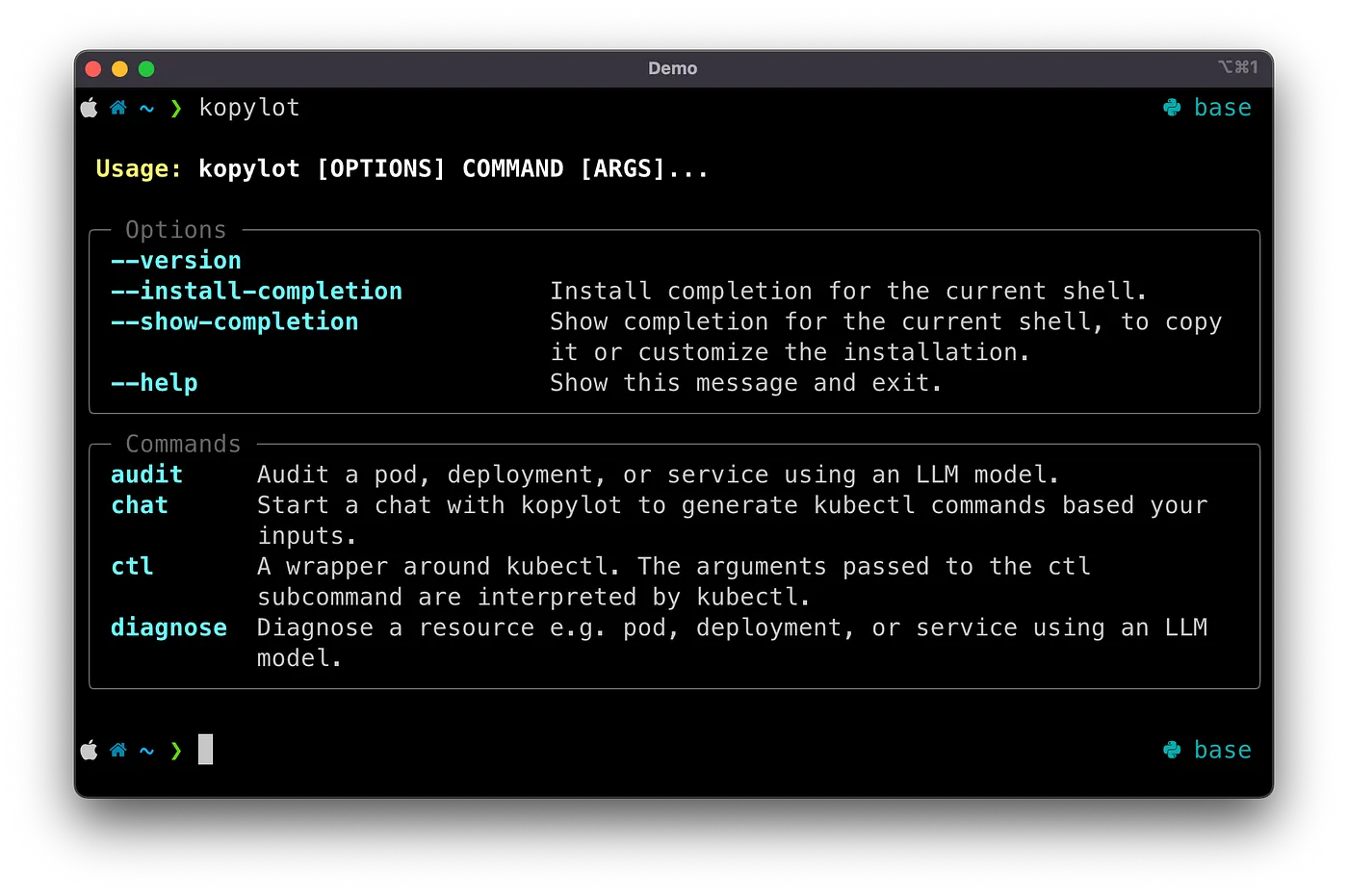

In its current version, KoPylot has four main features, each exposed as a subcommand of the kopylot CLI: Audit, Chat, Ctl, and Diagnose. Let’s dive into these commands now.

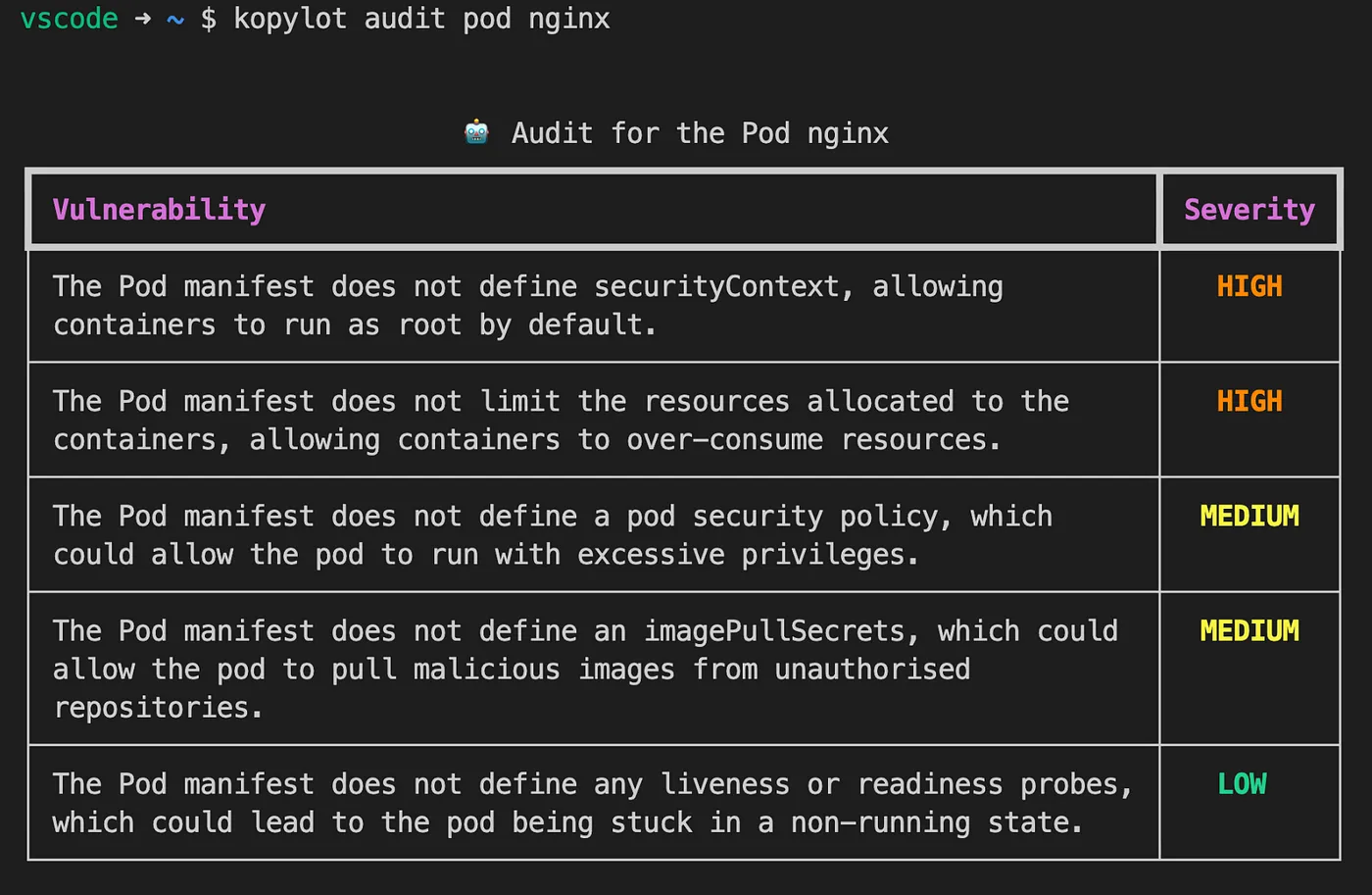

🔍 Audit:

Audit resources, such as pods, deployments, and services. KoPylot will take a single resource and look for vulnerabilities based on its manifest file.

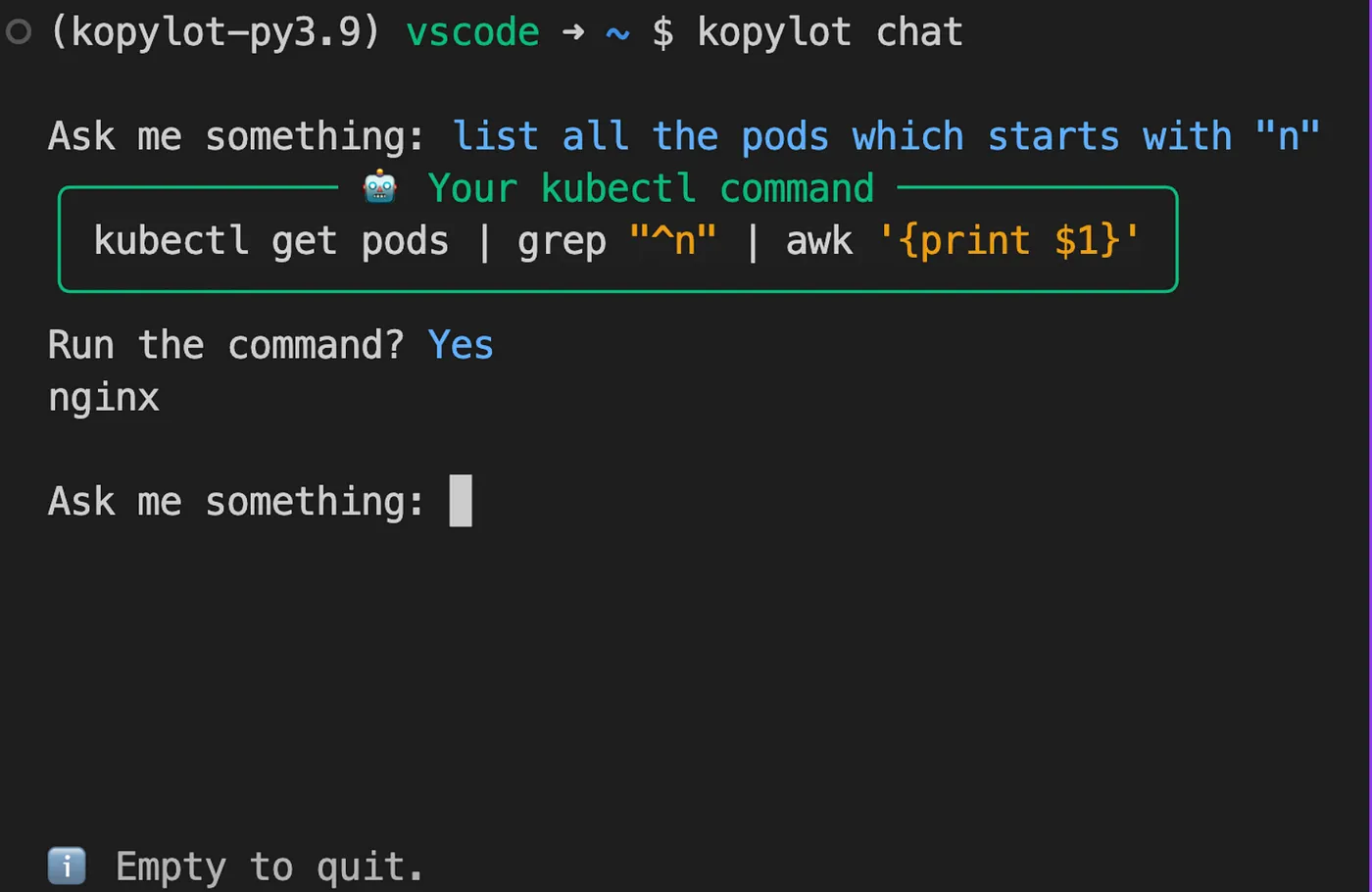

💬 Chat:

Ask KoPylot in plain English to generate kubectl commands. You will be able to review the command before running it 😉.

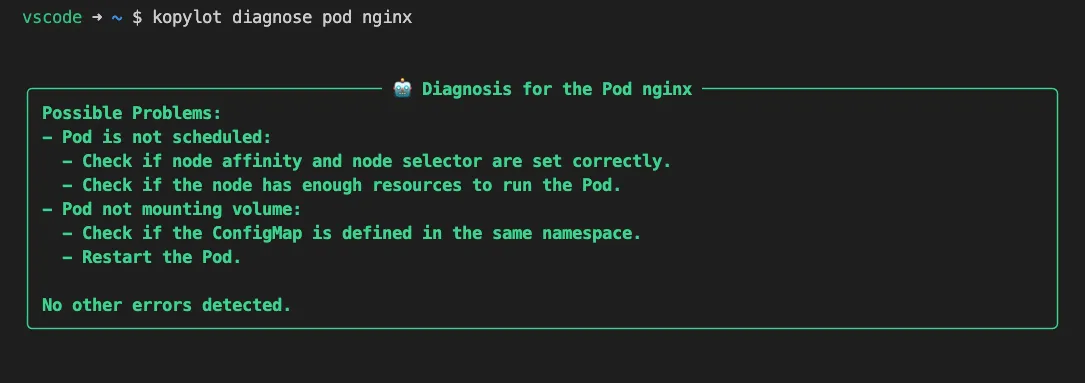
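To illustrate the "review before running" idea, here is a minimal sketch in Python. It is not KoPylot's actual implementation: the function name `review_and_run` and the `confirm` parameter are hypothetical names I picked for this example.

```python
import shlex
import subprocess
from typing import Callable


def review_and_run(command: str, confirm: Callable[[str], str] = input) -> bool:
    """Show a suggested command and run it only if the user answers 'y'.

    `confirm` is a hypothetical hook (defaults to input) so the prompt
    can be replaced in tests or scripts.
    """
    print(f"Suggested command: {command}")
    answer = confirm("Run this command? [y/N] ").strip().lower()
    if answer != "y":
        print("Skipped.")
        return False
    # shlex.split turns the text into an argument list, so no shell is invoked.
    subprocess.run(shlex.split(command), check=False)
    return True
```

Defaulting to "no" on anything other than an explicit `y` keeps a generated command from running by accident.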

🩺 Diagnose:

You can use the diagnose tool to help you debug the different components of your application, such as pods, deployments, and services. The diagnose command lists possible fixes for the broken resource.

☸️ Ctl:

A wrapper around kubectl. All the arguments passed to the ctl subcommand are interpreted by kubectl.

How does KoPylot work?

At the moment, KoPylot works by extracting information from the Kubernetes resource description (kubectl describe …) or manifest and feeding it into OpenAI’s Davinci model together with a prompt. The prompt tells the model what to do with the Kubernetes resource.
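The flow described above can be sketched roughly as follows. This is an illustration under my own assumptions, not the code from the repository: the prompt text, the function names, and the `complete` callable (a stand-in for whatever client sends the prompt to the model) are all hypothetical.

```python
import subprocess
from typing import Callable

# Hypothetical prompt text; KoPylot's real prompts live in its repository.
DIAGNOSE_TEMPLATE = (
    "You are a Kubernetes expert. The resource below is not working.\n"
    "List the likely causes and possible fixes.\n\n"
    "{description}"
)


def describe_resource(kind: str, name: str, namespace: str = "default") -> str:
    """Return the output of `kubectl describe <kind> <name> -n <namespace>`."""
    result = subprocess.run(
        ["kubectl", "describe", kind, name, "-n", namespace],
        capture_output=True,
        text=True,
        check=True,  # raise if kubectl exits with an error
    )
    return result.stdout


def build_prompt(description: str, template: str = DIAGNOSE_TEMPLATE) -> str:
    """Combine the instruction text with the resource description."""
    return template.format(description=description.strip())


def diagnose(
    kind: str,
    name: str,
    complete: Callable[[str], str],
    namespace: str = "default",
) -> str:
    """Describe the resource, build the prompt, and pass it to the model.

    `complete` is an assumed callable that takes a prompt and returns
    the model's text response.
    """
    return complete(build_prompt(describe_resource(kind, name, namespace)))
```

Keeping the model call behind a plain callable means the prompt-building part can be tested without a cluster or an API key.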

The prompt is also responsible for guiding how the model should structure the output. For example, the prompt used for the Audit command asks the model to output the results as a two-column JSON containing the vulnerabilities and their severities.
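Asking for structured output makes the response easy to consume in code. Below is a minimal sketch of parsing such a response. The exact schema is my assumption for this example (a JSON list of objects with the keys `vulnerability` and `severity`), and the names `Finding` and `parse_audit_output` are hypothetical.

```python
import json
from typing import List, NamedTuple


class Finding(NamedTuple):
    vulnerability: str
    severity: str


def parse_audit_output(raw: str) -> List[Finding]:
    """Parse model output assumed to be a JSON list of objects
    with the keys 'vulnerability' and 'severity'."""
    try:
        rows = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("model did not return valid JSON") from exc
    if not isinstance(rows, list):
        raise ValueError("expected a JSON list of findings")

    findings = []
    for row in rows:
        # Reject rows that do not match the assumed two-column shape.
        if not isinstance(row, dict) or not {"vulnerability", "severity"} <= row.keys():
            raise ValueError(f"unexpected row: {row!r}")
        findings.append(Finding(str(row["vulnerability"]), str(row["severity"])))
    return findings
```

Since a language model can return malformed or differently shaped output, the parser fails loudly instead of guessing.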

One of the goals on the roadmap is to make it possible to replace the OpenAI models with in-house hosted models. This would solve the issue of sending potentially sensitive data to OpenAI’s servers.

How good are the outputs?

I tested KoPylot on a dummy cluster with some broken pods, some with vulnerabilities and others without. What I noticed is that the Davinci model can give good directions when diagnosing a broken pod. Sometimes the advice will be too short for one to understand, but by running the diagnose command 2–3 times, it is possible to pinpoint the issue.

For the Chat command, I compared the outputs from Davinci and GPT-4. GPT-4 gave much better results from vague user prompts. So far I have only used GPT-4 via the ChatGPT UI, but I will definitely write a comparison once I get access to the API.

How can I use KoPylot?

You can use KoPylot by following the steps below:

- Request an API key from OpenAI.

- Export the key using the following command:

export KOPYLOT_AUTH_TOKEN=<your_openai_key>

- Install KoPylot using pip:

pip install kopylot

- Run KoPylot:

kopylot --help

How can I contribute?

The easiest way to contribute to KoPylot’s code is to clone the repository from GitHub using git clone https://github.com/avsthiago/kopylot and then start the environment using a Dev Container, as explained in the README file. I advise this approach because the Dev Container has a K3d Kubernetes cluster pre-installed, which makes testing much easier 😉.

Contributing by testing and reporting issues and bugs is also welcome. You can find more information about that in the CONTRIBUTING guide.

What is the next step for KoPylot?

In the next iterations, I’m planning to make it easier to integrate other LLMs into KoPylot, such as GPT-3.5-turbo, which can make the requests 10x cheaper.

I also plan to integrate LangChain into KoPylot. The idea here is to make it possible for KoPylot to perform more complex tasks on Kubernetes. For example, it could debug and solve issues in a cluster by itself (with some guardrails, of course).

What are the alternatives to KoPylot?

One similar project that I found is Kopilot, from knight42. It is also a Kubernetes assistant that uses LLMs behind the scenes. The main difference from KoPylot is that it is written in Go. At the moment, it doesn’t have the Chat command implemented, but it can respond in different languages.

Conclusion

To sum up, my hope is that KoPylot will make the lives of developers easier. Debugging issues on a Kubernetes cluster can be a time-consuming process that nobody should have to endure for hours or days on end when there are faster alternatives available.

Additionally, I’m looking forward to integrating KoPylot with open-source LLMs in the future, giving companies the option to use LLMs hosted on their own infrastructure.

I hope you enjoyed this post. If you have questions, you can find me on LinkedIn. See you next time!