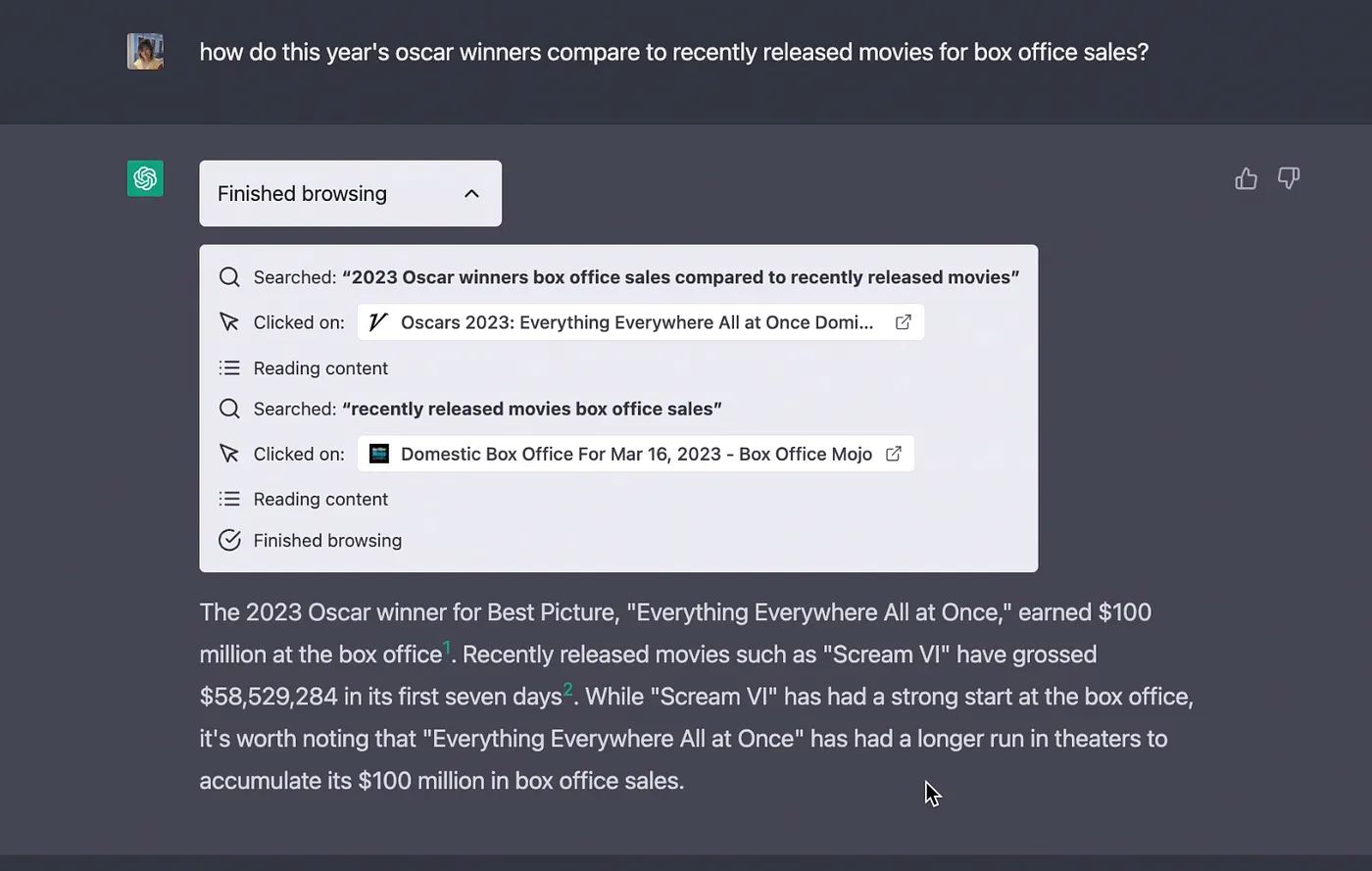

This month, OpenAI presented a new feature for ChatGPT: a plugin system. According to the released material, ChatGPT can now be extended to perform new tasks, such as:

- Retrieve real-time information — sports scores, stock prices, latest news, etc.

- Retrieve knowledge-based information — company docs, personal notes, etc.

- Perform actions on behalf of the user — booking a flight, ordering food, etc.

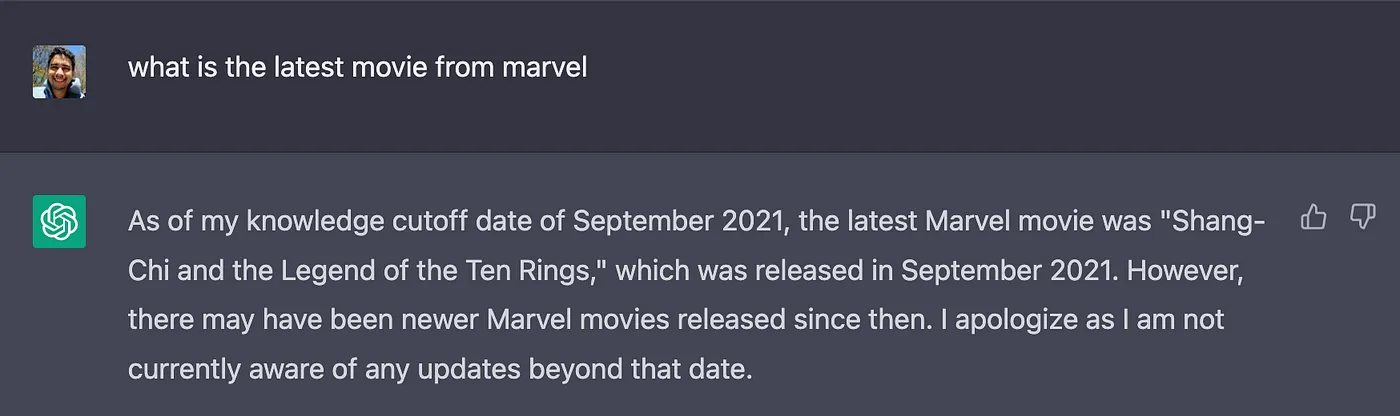

By using these plugins, ChatGPT can now search the web for up-to-date answers. This removes the constraint of relying solely on what the model learned during training, which used data only up to September 2021.

Create your own plugins

OpenAI has also allowed any developer to create their own plugins. Although developers currently need to join a waitlist, the documentation for creating plugins is already available.

The documentation explains how the plugin flow works and also provides some sample code.

Unfortunately, the documentation only shows how to integrate third-party APIs with ChatGPT. If you are as curious as I am, you are probably wondering how the inner details of this integration work. You may be wondering:

“How is it possible for a large language model to perform actions rather than just output text when it was not trained for that?”

So, this is what we are going to dive into now.

Enter LangChain

LangChain is a tool developed by Harrison Chase (hwchase17) in 2022. Its goal is to help developers integrate third-party applications with large language models (LLMs).

To explain how it works, I’ll use one of their examples.

import os

os.environ["SERPAPI_API_KEY"] = "<your_api_key_here>"

os.environ["OPENAI_API_KEY"] = "<your_api_key_here>"

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

# First, let's load the language model we're going to use to control the agent.

llm = OpenAI(temperature=0)

# Next, let's load some tools to use. Note that the `llm-math` tool uses an LLM, so we need to pass that in.

tools = load_tools(["serpapi", "llm-math"], llm=llm)

# Finally, let's initialize an agent with the tools, the language model, and the type of agent we want to use.

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

# Now let's test it out!

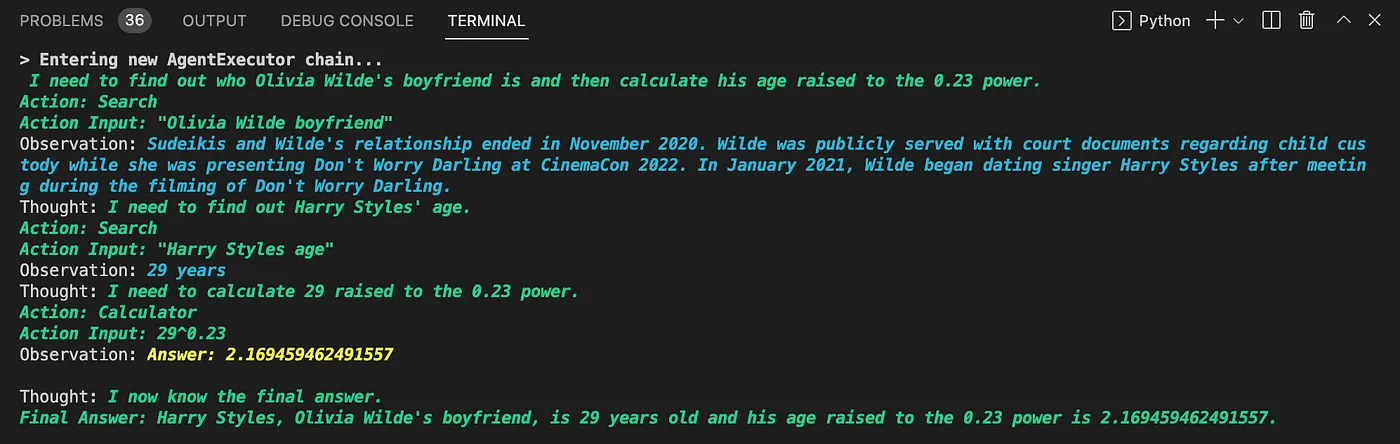

agent.run("Who is Olivia Wilde's boyfriend? What is his current age raised to the 0.23 power?")

From this example, we can see three main components:

- LLM: LLMs are a core component of LangChain. The LLM helps the agent understand natural language. In this example, the default model from OpenAI was used. According to the source code, the default model is text-davinci-003.

- Agent: Agents use an LLM to decide which actions to take and in what order. An action can either be using a tool and observing its output or returning a response to the user.

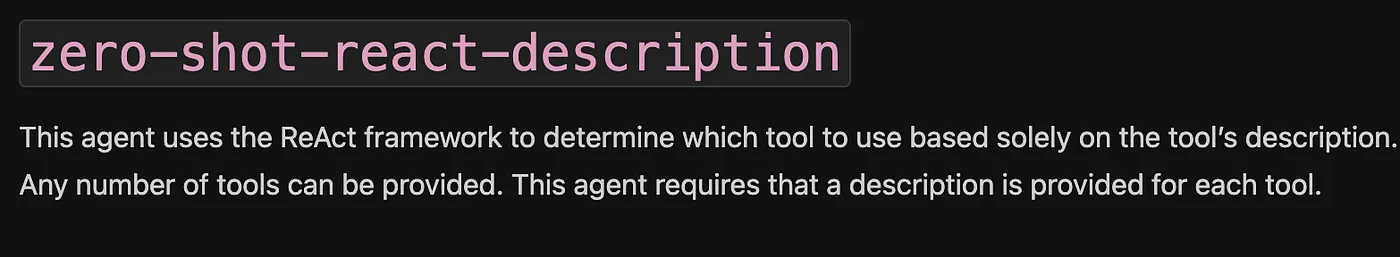

  Here we are using zero-shot-react-description. According to its documentation, “This agent uses the ReAct framework to determine which tool to use based solely on the tool’s description.” We will use this information later :)

- Tools: Functions that agents can use to interact with the world. In this example, two tools are used:

  - serpapi: a wrapper around the https://serpapi.com/ API. It is used for browsing the web.

  - llm-math: enables the agent to answer math-related questions, such as the “What is his current age raised to the 0.23 power?” part of the prompt.
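To make the phrase "based solely on the tool's description" more concrete, here is a minimal sketch of how tool descriptions could be rendered into the text an LLM sees. The `Tool` class, the `build_prompt` function, and the prompt wording are all hypothetical illustrations written for this article; the prompt LangChain actually uses may differ.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    """Hypothetical stand-in for an agent tool: a name, a description, and a callable."""
    name: str
    description: str
    func: Callable[[str], str]


def build_prompt(tools: List[Tool], question: str) -> str:
    """Render the tool descriptions and the question into one prompt string."""
    tool_lines = "\n".join(f"{t.name}: {t.description}" for t in tools)
    tool_names = ", ".join(t.name for t in tools)
    return (
        "Answer the following question. You have access to these tools:\n\n"
        f"{tool_lines}\n\n"
        "Use this format:\n"
        "Thought: what you should do next\n"
        f"Action: one of [{tool_names}]\n"
        "Action Input: the input to the action\n"
        "Observation: the result of the action\n"
        "Final Answer: the answer to the question\n\n"
        f"Question: {question}\n"
        "Thought:"
    )


# The callables are stubs; only the names and descriptions reach the prompt.
tools = [
    Tool("Search", "useful for answering questions about current events", lambda q: "stub"),
    Tool("Calculator", "useful for answering questions about math", lambda q: "stub"),
]
prompt = build_prompt(tools, "What is 29 raised to the 0.23 power?")
print(prompt)
```

The point of the sketch is that the model never sees the tool's code, only its name and description, so a well-written description is what lets the model pick the right tool.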

When running the script, the agent takes several steps: it searches for who Olivia Wilde’s boyfriend is, extracts his name, searches for Harry Styles’s age, and then calculates 29^0.23, which is approximately 2.17, using the llm-math tool.

A great advantage of LangChain is that it is not tied to a single LLM provider, as its documentation shows.
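The steps the agent goes through can be sketched as a simple loop: ask the LLM what to do, parse the action out of its text, run the matching tool, and feed the result back. The sketch below is not LangChain's implementation. It replaces the real LLM with scripted replies and the real tools with canned stubs (all names here are hypothetical) so that it runs offline, and the `Action:` / `Action Input:` text format is an assumption for illustration.

```python
import re
from typing import Callable, Dict, List

# Scripted replies standing in for a real LLM (assumption: a real model would
# produce text in roughly this shape when prompted with the expected format).
SCRIPTED_REPLIES = [
    "I need to find out who the boyfriend is.\nAction: Search\nAction Input: Olivia Wilde boyfriend",
    "I need his age.\nAction: Search\nAction Input: Harry Styles age",
    "I need to raise 29 to the 0.23 power.\nAction: Calculator\nAction Input: 29 ** 0.23",
    "I now know the final answer.\nFinal Answer: Harry Styles, about 2.17",
]

ACTION_RE = re.compile(r"Action: (.*?)\nAction Input: (.*)")


def make_scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Return a fake LLM that ignores the prompt and yields the next scripted reply."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


def search(query: str) -> str:
    """Stub search tool with canned answers instead of a real web search."""
    canned = {"Olivia Wilde boyfriend": "Harry Styles", "Harry Styles age": "29 years"}
    return canned.get(query, "no result")


def calculator(expression: str) -> str:
    """Stub calculator that only understands 'base ** exponent'."""
    base, exponent = (float(part) for part in expression.split("**"))
    return str(round(base ** exponent, 2))


def run_agent(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
              question: str, max_steps: int = 5) -> str:
    """Loop: ask the LLM, parse its chosen action, run the tool, append the observation."""
    transcript = f"Question: {question}\nThought:"
    for _ in range(max_steps):
        reply = llm(transcript)
        if "Final Answer:" in reply:
            return reply.split("Final Answer:")[-1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"Could not parse LLM reply: {reply!r}")
        tool_name, tool_input = match.group(1).strip(), match.group(2).strip()
        observation = tools[tool_name](tool_input)
        transcript += f" {reply}\nObservation: {observation}\nThought:"
    raise RuntimeError("Agent did not finish within max_steps")


answer = run_agent(
    make_scripted_llm(SCRIPTED_REPLIES),
    {"Search": search, "Calculator": calculator},
    "Who is Olivia Wilde's boyfriend? What is his current age raised to the 0.23 power?",
)
print(answer)
```

Seen this way, the model still only outputs text. It is the surrounding loop that turns that text into actions by parsing it and calling the corresponding function.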

Why Do I Think LangChain Powers the ChatGPT Plugin System?

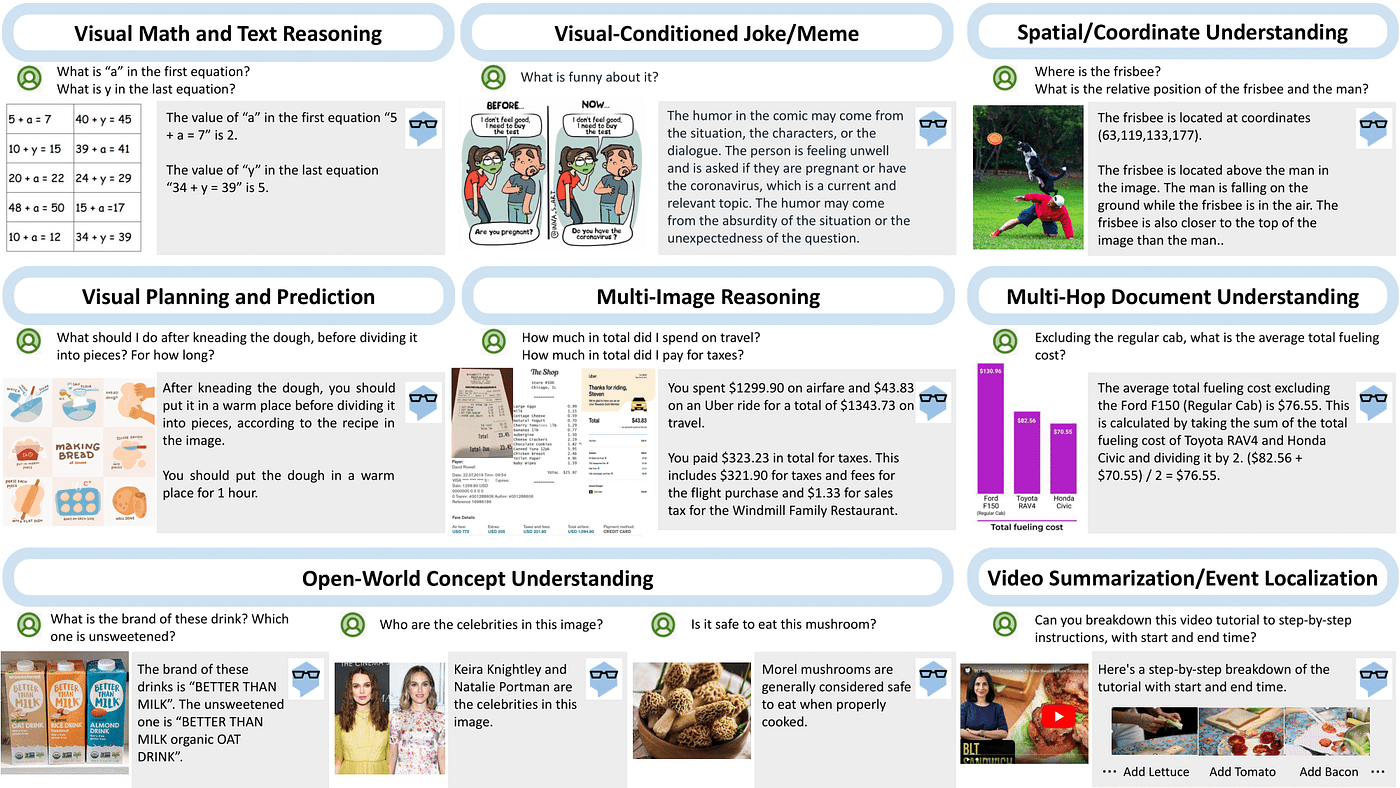

On March 21st, Microsoft, OpenAI's strongest partner, released MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action.

When looking at the capabilities of this “system paradigm,” as they call it, we can see that each example involves an interaction between a language model and some external application.

Image credit: https://multimodal-react.github.io/


Looking at the sample code provided, we can see that the interaction between the model and the tools was implemented using LangChain. The README.md file also states, “MM-REACT code is based on langchain.”

Combining this evidence with the following sentence from the ChatGPT plugins documentation, “The plugin description, API requests, and API responses are all inserted into the conversation with ChatGPT,” we can assume that the plugin system adds the different plugins as tools for the agent, which is ChatGPT.
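To picture what "inserted into the conversation" could look like, here is a purely speculative sketch. It is not OpenAI's implementation: the message roles, the `name` and `description` fields, the example plugin, and both helper functions are hypothetical and chosen only to illustrate the idea of treating a plugin as a tool.

```python
import json
from typing import Any, Dict, List

Message = Dict[str, str]


def plugin_as_tool_message(plugin: Dict[str, str]) -> Message:
    """Turn a (hypothetical) plugin description into a message the model can read."""
    return {
        "role": "system",
        "content": f"Tool {plugin['name']}: {plugin['description']}",
    }


def record_api_call(conversation: List[Message], plugin_name: str,
                    request: Dict[str, Any], response: Dict[str, Any]) -> None:
    """Append the API request and its response to the conversation as plain text."""
    conversation.append({
        "role": "assistant",
        "content": f"Action: {plugin_name}\nAction Input: {json.dumps(request)}",
    })
    conversation.append({
        "role": "system",
        "content": f"Observation: {json.dumps(response)}",
    })


# Hypothetical plugin and data, for illustration only.
flights_plugin = {"name": "flights", "description": "Search and book flights."}

conversation: List[Message] = [plugin_as_tool_message(flights_plugin)]
conversation.append({"role": "user", "content": "Find me a flight to Lisbon."})
record_api_call(conversation, "flights", {"destination": "Lisbon"}, {"results": 3})

for message in conversation:
    print(message["role"], "->", message["content"])
```

If the real system works anything like this, the plugin description plays the same role as a tool description in the LangChain example, and the API response plays the role of the observation.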

It is also likely that OpenAI turned ChatGPT into an agent of the type zero-shot-react-description to support the plugins (the same type we saw in the earlier example). I say this because the description of the API is inserted into the conversation, which matches what this agent expects: as its documentation puts it, the agent determines which tool to use “based solely on the tool’s description.”

Conclusion

Although the plugin system is not yet available to users, we can use the released documentation and the implementation of MM-REACT to understand what powers the ChatGPT plugin system.

See you in the next article! 😁